Nearly 25 years after the year 2000 began without the much-hyped computer catastrophe, Y2K is enjoying a resurgence as Americans become nostalgic for the late ’90s and early ’00s. The computer cataclysm that never happened is now having a cultural moment, whether as a sight gag in Abbott Elementary, a plot device in The Righteous Gemstones, or the theme of a new apocalyptic comedy aptly named Y2K. In that movie, a group of teens fight for their lives after Y2K results in a sort of robot uprising. Filmgoers can expect laughs, thrills, and ’90s nostalgia (including Fred Durst in his iconic red hat), but most of all catharsis from knowing that the actual Y2K was not nearly as disastrous as Y2K makes it seem.

Reflecting on the actual Y2K is a reminder that computing’s real risks have less to do with murderous robots, and far more to do with how reliant we are on poorly maintained lines of code.

Indeed, that’s what made Y2K so novel. The harbinger of doom was not an ancient prophecy or a vast government conspiracy, but rather a straightforward issue with computers. Thus, even amid reassurances from the experts working on Y2K that the problem was being handled, Y2K forced people to acknowledge how dependent they were on oft-unseen computer systems. Ultimately, Y2K was less about the fear that the sky is falling, and more about the recognition that computer systems were holding up the sky.

Y2K emerged out of a basic computing problem: in order to save memory, computer professionals truncated dates by lopping off the century digits, turning 1950 into 50. Wondering how this might become a problem? Consider the following: 1999 minus 1950 equals 49, and 99 minus 50 also equals 49; but 2000 minus 1950 equals 50, while 00 minus 50 equals -50. Results like that -50 could lead computers to churn out bogus data or shut down entirely.
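For readers who want to see that arithmetic play out, here is a minimal sketch in Python. It is an illustration only, not a reconstruction of any real system from the era: the function names are hypothetical, and it assumes a program that keeps only the last two digits of each year before subtracting.

```python
def years_between_two_digit(later_year: int, earlier_year: int) -> int:
    """Subtract two years after truncating each to its last two digits.

    Hypothetical helper: it mimics a program that stores 1950 as 50.
    """
    return (later_year % 100) - (earlier_year % 100)


def years_between_full(later_year: int, earlier_year: int) -> int:
    """Subtract two years using all four digits."""
    return later_year - earlier_year


# Compare the two approaches on either side of the century rollover.
for current_year in (1999, 2000):
    truncated = years_between_two_digit(current_year, 1950)
    full = years_between_full(current_year, 1950)
    print(f"{current_year}: two-digit result {truncated}, four-digit result {full}")
```

In 1999 the two calculations agree at 49. In 2000 the four-digit version gives 50 while the two-digit version gives -50, the kind of result that could ripple through any program relying on it.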

Between the middle of the 20th century, when that programming decision was made, and the 1990s, computers went from massive machines found mostly at government laboratories and universities to a common feature of many businesses and organizations. By the 1990s, computers were doing everything from processing payroll to tracking what grocery stores had in stock to keeping power plants working. Many in IT knew the date-related issue existed, but for years the assumption had been that someone would come along and take care of it before it was too late. But by 1993, the point at which the IT world began to truly focus on the problem, it became clear that there wasn’t much time left before “too late” arrived.

To sound the alarm, some publications aimed at computer professionals used terms like “doomsday” to raise the stakes, but this was meant not as a premonition of defeat but as a call to action. While some in IT described the problem as a dragon, they also emphasized that it was a beast that could be slain, provided that sufficient time and resources were devoted to the task. This challenge was made all the more difficult by the recognition that large computer-related projects tend to take longer than expected and go over budget. But for all of this evocative terminology, by 1995 most people were just calling the problem Y2K.

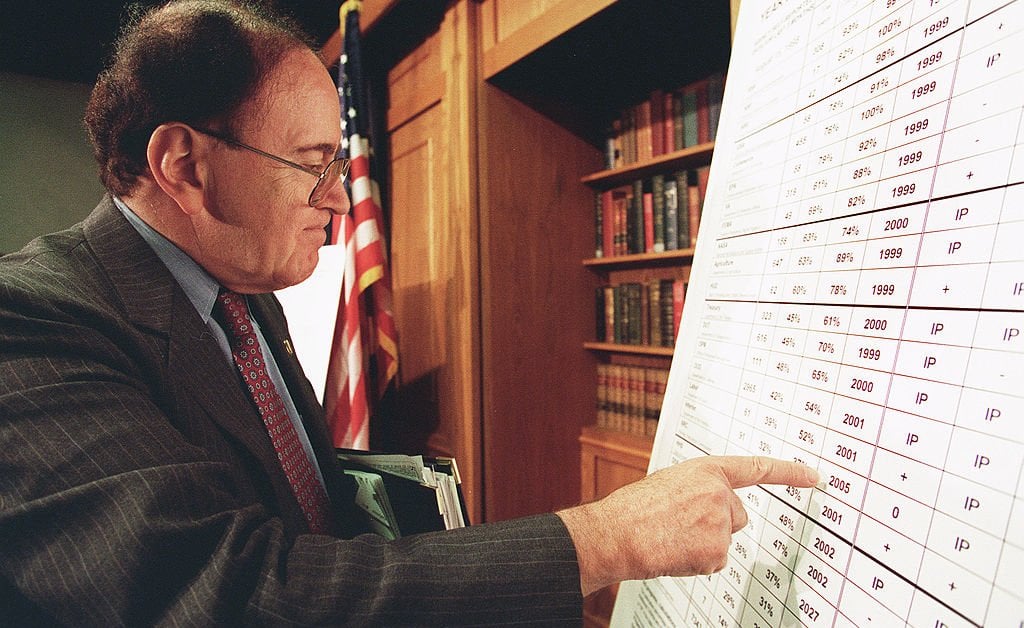

Though occasionally trafficking in hair-raising phrases, those in IT succeeded in raising awareness of the problem, and soon executives and political leaders began to take serious notice. Pointing to the assessments of “many computer scientists, programmers, and…their managers,” in 1996 the Congressional Research Service described Y2K as “formidable.” Representative Carolyn Maloney, a Democrat from New York City, similarly warned at Congress’s first hearing on Y2K: “When the ball drops in Times Square on New Year’s Eve 1999, let’s make sure the government has not dropped the ball on the year 2000.” Major attention and effort were devoted to the problem, with political leaders exercising oversight of the government’s own readiness while pushing for action in the private sector. Over the next couple of years, numerous meetings took place in the House, the Senate formed a Special Committee on Y2K, and President Clinton had his own Council on Year 2000 Conversion. Meanwhile, organizations like the International Y2K Cooperation Center worked to coordinate Y2K preparedness efforts worldwide.

By 1998, most in the IT sector and government felt confident the problem was well in hand. They had succeeded in making Y2K a priority, and the work had been done to assess, renovate, validate, and implement the necessary programming changes. In its final report before the start of the year 2000, the Senate Special Committee warned about “sensationalists,” arguing that focus on “extremes are counterproductive, and do not accurately reflect what typifies most Y2K problems.” Indeed, by the closing months of 1999, the consensus in the IT sector and government was that Y2K would be a bump in the road, with disruptions no worse than a winter storm. And they were right.

But at the same time, public interest intensified. Case in point: TIME’s Jan. 18, 1999, cover story on Y2K warned of “THE END OF THE WORLD!?!” alongside an image of computers literally raining from the sky. The article focused heavily on those preparing for the worst, rather than listening to those who were trying their best to fix the problem. Similarly, in an October 1999 episode of The Simpsons, the poor residents of Springfield found themselves dealing with a Y2K apocalypse after Homer Simpson was put in charge of Y2K preparedness at Springfield’s nuclear power plant.

In 1999, Y2K: The Movie premiered, with planes falling from the sky and nuclear meltdowns. When it came out, the film was blasted for stoking fear and exploiting sensationalism. “Y2K experts” noted in Computerworld that the film “misses the big picture” and “trivializes the whole problem.” Those working on Y2K were confident that Y2K wasn’t going to look anything like the made-for-TV-movie version of it. But as one 1999 “Y2K and You” pamphlet from the President’s Council on Year 2000 Conversion explained, “the large number and inter-connectivity of computers we depend on every day make Y2K a serious challenge.” The root of the anxiety surrounding Y2K, and perhaps its enduring resonance, is a recognition of precisely this dependency—which has only grown over time.

When we worry about Y2K today (and as the new Y2K movie makes clear, we still do), we aren’t worrying about how Y2K threatened to make computers crash then. We’re trying to reassure ourselves that the computers we are so reliant on won’t crash now.

Perhaps it’s inevitable for a major crisis that is successfully averted to become fodder for a comedy. And while it is hard to learn history from an apocalyptic horror comedy, that is preferable to needing to learn history from a tragedy.

Zachary Loeb is an assistant professor in the history department at Purdue University. He works on the history of technology, the history of disasters, and the history of technological disasters. He is currently working on a book about the year 2000 computer problem (Y2K).

Made by History takes readers beyond the headlines with articles written and edited by professional historians. Learn more about Made by History at TIME here. Opinions expressed do not necessarily reflect the views of TIME editors.