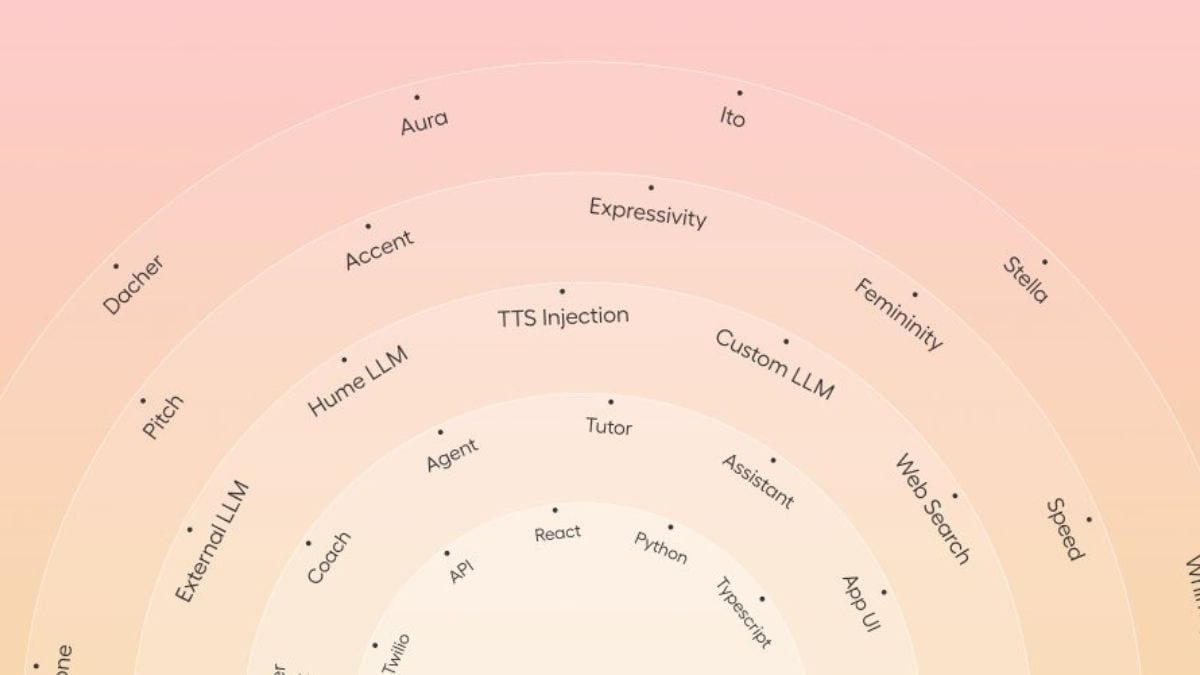

Hume, a New York-based artificial intelligence (AI) firm, unveiled a new tool on Monday that allows users to customise AI voices. Dubbed Voice Control, the feature is aimed at helping developers build custom voices and integrate them into their chatbots and other AI-based applications. Instead of offering a large library of preset voices, the company offers granular control over 10 different dimensions of a voice. By setting the desired value for each dimension, users can generate unique voices for their apps.

Hume Voice Control Tool

The company detailed the new AI tool in a blog post. Hume stated that it is trying to solve a common problem for enterprises: finding an AI voice that matches their brand identity. With this feature, users can customise different aspects of how a voice is perceived, allowing developers to create a more assertive, relaxed, or buoyant voice for their AI-based applications.

Hume's Voice Control is currently available in beta, but it can be accessed by anyone registered on the platform. Gadgets 360 staff members were able to access the tool and test the feature. Developers can adjust 10 different dimensions: gender, assertiveness, buoyancy, confidence, enthusiasm, nasality, relaxedness, smoothness, tepidity, and tightness.

Instead of prompt-based customisation, the company has added a slider that ranges from -100 to +100 for each of the dimensions. The company stated that this approach was taken to eliminate the vagueness associated with describing a voice in text and to offer granular control over the voice.
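To make the slider model concrete, the following is a minimal Python sketch of how a developer might represent and validate the 10 slider settings locally before entering them on Hume's platform. The class name `VoiceSettings`, the field names, the use of integer values, and the assumption that 0 is the neutral slider position are all hypothetical illustrations; this is not Hume's API, and the actual voice generation happens on Hume's side.

```python
from dataclasses import dataclass, fields

# Hypothetical local model of the 10 Voice Control sliders described above.
# Names, integer values and the neutral default of 0 are assumptions, not Hume's API.
SLIDER_MIN, SLIDER_MAX = -100, 100


@dataclass
class VoiceSettings:
    gender: int = 0
    assertiveness: int = 0
    buoyancy: int = 0
    confidence: int = 0
    enthusiasm: int = 0
    nasality: int = 0
    relaxedness: int = 0
    smoothness: int = 0
    tepidity: int = 0
    tightness: int = 0

    def __post_init__(self) -> None:
        # Reject any slider value outside the -100 to +100 range.
        for f in fields(self):
            value = getattr(self, f.name)
            if not isinstance(value, int) or not SLIDER_MIN <= value <= SLIDER_MAX:
                raise ValueError(
                    f"{f.name} must be an integer in [{SLIDER_MIN}, {SLIDER_MAX}], got {value!r}"
                )

    def changed(self) -> dict:
        # Return only the sliders moved away from the assumed neutral position (0).
        return {f.name: getattr(self, f.name) for f in fields(self) if getattr(self, f.name) != 0}


voice = VoiceSettings(assertiveness=60, relaxedness=-30)
print(voice.changed())  # {'assertiveness': 60, 'relaxedness': -30}
```

Fixed numeric ranges like this are easy to validate and compare, which reflects the company's stated reason for preferring sliders over free-text descriptions of a voice.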

In our testing, we found that changing any of the 10 dimensions made an audible difference to the AI voice, and the tool was able to keep the different dimensions independent of one another. The AI firm claimed that this was achieved by developing a new "unsupervised approach" that preserves most characteristics of each base voice when specific parameters are varied. However, Hume did not detail the source of the data used to develop the tool.

Notably, after creating an AI voice, developers will have to deploy it in their application by configuring Hume's Empathic Voice Interface (EVI) AI model. While the company did not specify which version powers this experimental feature, it is likely the EVI-2 model.

In the future, Hume plans to expand the range of base voices, introduce additional interpretable dimensions, enhance the preservation of voice characteristics under extreme modifications, and develop advanced tools to analyse and visualise voice characteristics.