Oppo on Wednesday announced a new on-device artificial intelligence (AI) feature designed for its smartphones. The firm plans to implement a mixture-of-experts (MoE) AI architecture to substantially reduce energy consumption, while also improving processing speed. Oppo collaborated with leading chipset manufacturers to develop the technology for smartphones and similar devices. However, it did not reveal which upcoming smartphones will be equipped with this technology. The firm recently unveiled ColorOS 15 with new AI features, and its flagship Find X8 series will be launched on October 24.

Oppo Unveils On-Device MoE AI Architecture for Smartphones

The company shared details of its new in-house technology for smartphones. The MoE architecture itself is not new; it has been popularised by AI firm Mistral AI, which has released several open-source MoE-based models under the Mixtral name.

Unlike a conventional large language model (LLM), which is one massive, centralised network that handles a wide variety of AI tasks, an MoE system is built from several smaller specialised models, or "experts", described here as small language models (SLMs). Each expert is trained for a narrower range of tasks, which lets it handle those tasks faster and, within that range, more accurately.
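Oppo has not published how its version works. For a concrete picture of the general technique, here is a minimal sketch of a sparse MoE layer with top-k gating, the scheme used in models such as Mixtral (which activates 2 of its 8 experts per token). The toy experts, the gate vectors and the `moe_forward` name are hypothetical and chosen only for illustration.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(scores: Sequence[float]) -> List[float]:
    """Turn raw gate scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, experts: Sequence[Expert],
                gate_weights: Sequence[Vector], top_k: int = 2) -> Vector:
    """Toy sparse MoE layer: score all experts, run only the top_k, mix their outputs.

    Assumes every expert returns a vector of the same length as its input.
    """
    # Gate score per expert: dot product of the input with that expert's gate vector.
    scores = [sum(w * xi for w, xi in zip(gate, x)) for gate in gate_weights]
    # Indices of the top_k highest-scoring experts.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Normalise the gate probabilities over the chosen experts only.
    probs = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for p, i in zip(probs, chosen):
        y = experts[i](x)  # only the selected experts are evaluated
        out = [o + p * yj for o, yj in zip(out, y)]
    return out


if __name__ == "__main__":
    # Four hypothetical "experts" that simply scale their input by 1, 2, 3 and 4.
    experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
    gate_weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, -1.0]]
    print(moe_forward([1.0, 2.0], experts, gate_weights, top_k=2))
```

In real models the experts and the gate are learned neural network layers rather than hand-written functions, but the control flow is the same: every expert is scored, and only the highest-scoring few do any work.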

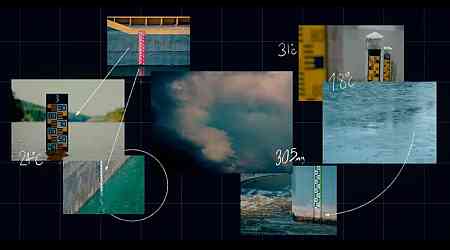


On-device mixture-of-experts AI architecture implementation

Photo Credit: Oppo

In an MoE architecture, multiple expert models run in the backend behind a single, unified interface at the frontend. Whenever a user requests a task, a routing component assigns it to the expert best suited to handle it. Because different experts can work on different tasks at the same time, the system can process requests in parallel, and running several tasks simultaneously does not significantly slow it down.
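The request-level routing described above can be sketched as a dispatcher that sends each task to the highest-scoring expert and runs the chosen experts concurrently. This is an illustrative assumption, not Oppo's design: the `Expert` class, the capability scores and the `route` and `handle_requests` helpers are all hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Expert:
    name: str
    run: Callable[[str], str]
    # Hypothetical capability scores: how well this expert handles each task type.
    skills: Dict[str, float] = field(default_factory=dict)


def route(task_type: str, experts: List[Expert]) -> Expert:
    """Pick the expert with the highest capability score for this task type."""
    return max(experts, key=lambda e: e.skills.get(task_type, 0.0))


def handle_requests(requests: List[Tuple[str, str]], experts: List[Expert]) -> List[str]:
    """Route each (task_type, payload) request and run the chosen experts concurrently."""
    with ThreadPoolExecutor(max_workers=max(1, len(experts))) as pool:
        futures = [pool.submit(route(task, experts).run, payload)
                   for task, payload in requests]
        # Collect results in the same order the requests arrived.
        return [f.result() for f in futures]


if __name__ == "__main__":
    experts = [
        Expert("summariser", lambda s: f"summary of {s!r}", {"summarise": 0.9, "translate": 0.2}),
        Expert("translator", lambda s: f"translation of {s!r}", {"translate": 0.95, "summarise": 0.1}),
    ]
    print(handle_requests([("translate", "bonjour"), ("summarise", "long text")], experts))
```

`ThreadPoolExecutor` stands in here for whatever scheduling a phone would use across its CPU, GPU and NPU; for CPU-bound pure-Python work, Python's global interpreter lock limits how much real parallelism threads provide.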

Combining parallel processing with smaller models also reduces power consumption, since only the experts needed for a given task are active rather than one large model. Oppo claims it has found a way to run this architecture on-device, which should lead to faster AI processing as well as improved battery life.
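A rough way to see where the savings come from is to count how many of a sparse MoE model's weights are used for each input. The sketch below assumes hypothetical sizes (shared layers plus equally sized experts, with `top_k` experts active); Oppo has not disclosed its model sizes.

```python
def active_fraction(shared_params: float, params_per_expert: float,
                    n_experts: int, top_k: int) -> float:
    """Fraction of a sparse MoE model's parameters used for a single input."""
    if not 0 < top_k <= n_experts:
        raise ValueError("top_k must be between 1 and n_experts")
    total = shared_params + n_experts * params_per_expert  # all weights that must be stored
    active = shared_params + top_k * params_per_expert     # weights actually computed with
    return active / total


if __name__ == "__main__":
    # Hypothetical sizes in arbitrary units: 1 unit of shared layers, 8 experts of 5 units each.
    frac = active_fraction(shared_params=1.0, params_per_expert=5.0, n_experts=8, top_k=2)
    print(f"{frac:.1%} of the weights are used per input")
```

With these made-up numbers, about 27 percent of the weights do work for each input, so compute per input drops roughly in proportion. Actual energy use also depends on memory traffic, and all experts still have to be stored, which matters on a phone with limited RAM.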

Based on internal testing, the Chinese smartphone maker claims that MoE-based AI systems can process AI tasks 40 percent faster than conventional architectures.

Oppo also highlighted that an on-device MoE architecture will offer users more privacy, as fewer AI tasks will need to be processed on remote servers. This means more of the user's data can stay on the device rather than being sent to the cloud.